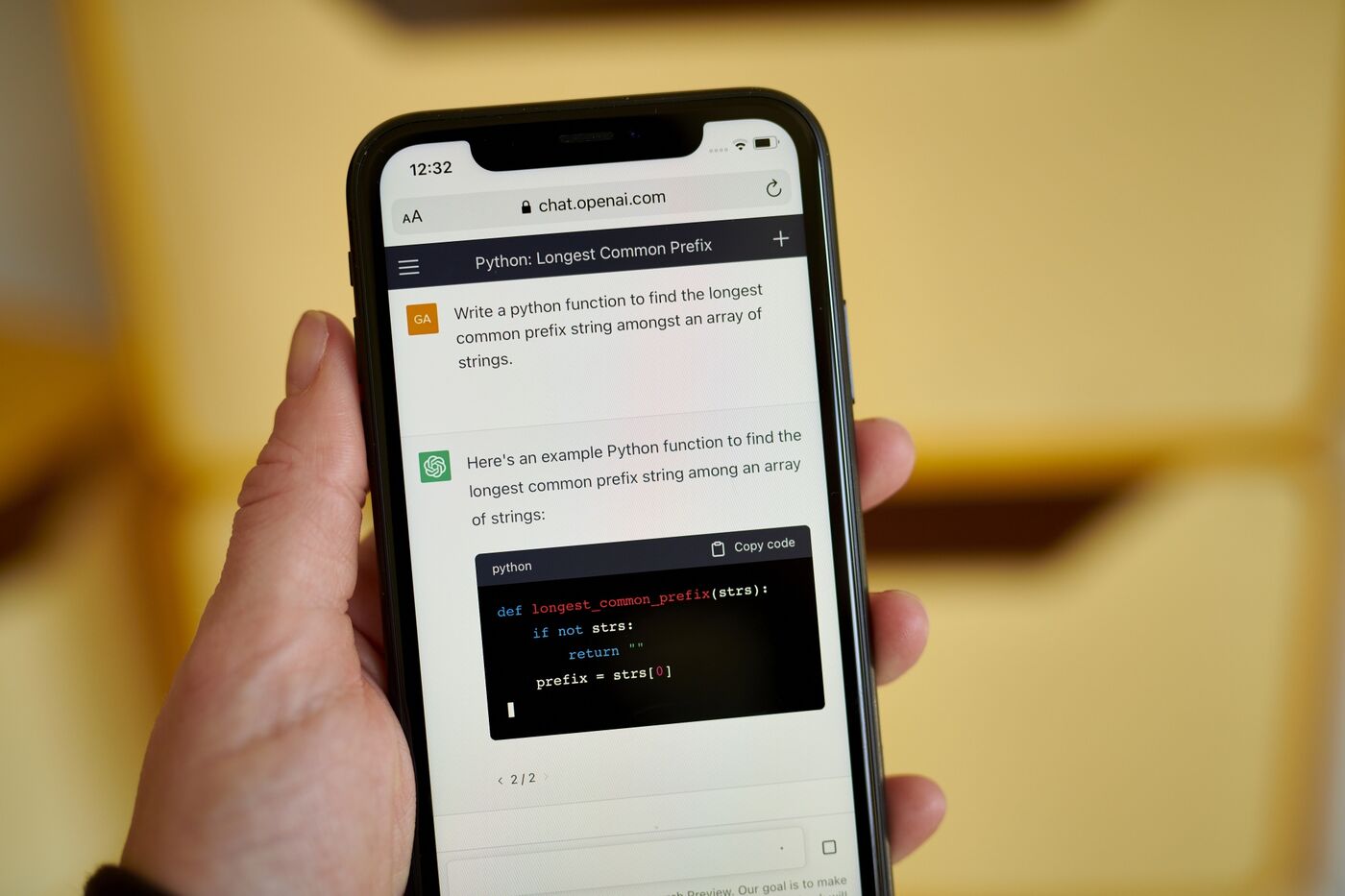

OpenAI is introducing a successor to the artificial intelligence tool that gave rise to the popular services ChatGPT and Dall-E and sparked fierce competition among tech companies in generative AI. The new technology, called GPT-4, is more precise, creative, and collaborative, according to the company. Microsoft Corp., which has invested more than $10 billion in OpenAI, said the new version of the AI tool is powering its Bing search engine. Paid subscribers to OpenAI's ChatGPT Plus will get access to GPT-4, short for generative pre-trained transformer 4, and developers can sign up to use it to build applications. OpenAI said Tuesday that the tool is "40% more likely to produce factual responses than GPT-3.5 on our internal evaluations." The updated version also accepts image queries as well as text, so a user can submit a picture with a related question and ask GPT-4 to describe it or answer questions about it.

The Dall-E image-generation tool and the chatbot ChatGPT, two products that captured the public's attention and spurred other tech companies to pursue AI more aggressively, were built on GPT-3, released in 2020, and its later 3.5 version. Since then, speculation has grown about whether the next model would be more capable and able to handle more tasks. According to OpenAI, Morgan Stanley is using GPT-4 to organize data, while Stripe Inc., a provider of electronic payments, is evaluating whether it can help fight fraud. Other customers include language-learning company Duolingo Inc., the Khan Academy, and Icelandic authorities. Be My Eyes, a company that develops tools for people who are blind or have low vision, is also using the software for a virtual volunteer service that lets people send images to an AI-powered service, which answers questions and provides visual assistance.

OpenAI President Greg Brockman said the new version is better at finding specific information in corporate earnings reports and at answering questions about intricate sections of the US federal tax code; in other words, it is better at searching through "dense business legalese." Like GPT-3, GPT-4 cannot reason about current events, because it was trained on data that, for the most part, existed before September 2021.

GPT-4 is an example of a large language model, a category of AI system that analyzes enormous amounts of online writing to learn how to produce human-sounding text. In recent months, the technology has generated both excitement and controversy. Beyond the worry that such systems will be used to cheat on schoolwork, there is concern that they can reinforce biases and spread false information. When OpenAI first released GPT-2 in 2019, it made only part of the model available to the public because of worries about malicious use. Researchers have found that large language models occasionally veer off topic or produce offensive or racist speech. Concerns have also been raised about the carbon emissions from all the computing power required to build and maintain these AI models. OpenAI said it spent six months making the software safer. For instance, the final version of GPT-4 is better at handling inquiries about how to make a bomb or where to find cheap cigarettes; in the latter case, it now provides a warning about the negative effects of smoking as well as potential ways to save money on tobacco products.

The company declined to provide specific technical information about GPT-4, including the size of the model. Brockman, the company's president, said OpenAI expects that cutting-edge models will be developed in the future by companies investing in billion-dollar supercomputers, and that some of the most advanced tools will carry risks. For now, OpenAI wants to keep some aspects of its work secret to give itself "some breathing room to really focus on safety and get it right." It is a contentious strategy in the AI field: some other companies and experts argue that greater transparency, and making AI models available to the general public, will improve safety. OpenAI also said that while it does not reveal all details of model training, it does share more information about its efforts to eliminate bias and improve the product.

The announcement is one of many AI-related releases from OpenAI, Microsoft, and rivals in the nascent market. Companies have released new chatbots, AI-powered search, and novel ways to build the technology into business software for salespeople and office workers. Like other recent OpenAI models, GPT-4 was trained on Microsoft's Azure cloud platform. Earlier Tuesday, Google-backed Anthropic, a startup founded by former OpenAI executives, announced the release of its Claude chatbot to business customers. Alphabet Inc.’s Google, meanwhile, said it is giving customers access to some of its language models, and Microsoft is scheduled to talk Thursday about how it plans to offer AI features for Office software. AI programs that produce content resembling existing work have also raised concerns about copyright and ownership, including whether these systems should be allowed to train on other people's code, writing, and artwork. OpenAI, Microsoft, and their competitors are the targets of lawsuits.

“We’re really starting to get to systems that are actually quite capable and can give you new ideas and help you understand things that you couldn’t otherwise,” said Greg Brockman, president and co-founder of OpenAI. In a January interview, OpenAI Chief Executive Officer Sam Altman tried to keep expectations in check. “The GPT-4 rumor mill is a ridiculous thing,” he said. “I don’t know where it all comes from. People are begging to be disappointed and they will be.” The company’s chief technology officer, Mira Murati, told Fast Company earlier this month that “less hype would be good.” “GPT-4 still has many known limitations that we are working to address, such as social biases, hallucinations and adversarial prompts,” the company said Tuesday in a blog, referring to things like submitting a prompt or question designed to provoke an unfavorable action or damage the system. “We encourage and facilitate transparency, user education and wider AI literacy as society adopts these models. We also aim to expand the avenues of input people have in shaping our models.”

“We have actually been very transparent about the safety training stage,” said Sandhini Agarwal, an OpenAI policy researcher.

Bloomberg